Why We're Going Cognitive

Vision and hearing are your main senses when experiencing a movie. You recognize the actors, and you understand the spoken language even if it is not your native one. You follow the story and enjoy the cinematography of the different environments. By sharing all these experiences, you can not only relate to the movie itself but also convince a friend to see it.

Wouldn’t it be great if your Media Asset Management system could possess similar capabilities when managing your media? Being able to understand the language? Recognize actors, detect and define parts of the image – maybe also differentiate between genres? But how?

To do this, you need a system that can actually see and listen to what’s inside your media. You need a system that has cognitive capabilities like your own and can store that information in (yes, you guessed right) metadata.

But how do we navigate our rapidly growing archives of file-based media?

Media files today already include a lot of metadata in a descriptive format. There is room for general metadata as well as technical metadata describing the actual file structures. MAM (Media Asset Management) systems make use of this existing metadata, along with additional layers of metadata frameworks, to help you navigate, find and tag not only the media files themselves but also time-based intervals within the media.

Because of this, you can argue that the true definition of a media file must include an audio-visual asset AND an associated metadata description. Without one or the other – the asset is not complete.

Cognitive Metadata - "to boldly go where no MAM has gone before"

Traditionally, the common notion is that while a machine can read and act on the associated text-based metadata of a media file, a human can understand the storyline. We can detect lipsync, recognize actors, emotions, and all the visual objects inside a frame. We can also listen to the language spoken, understand the story and do a translation into a new language.

Because of this common view of the differences between machine and human capabilities, it is still quite common for production companies and similar organizations to divide many tasks in a media supply chain between man and machine this way.

But times are changing, and they are changing fast. For any Content Owner, CTO or technical strategist building a modern media workflow, it is vital to challenge this traditional view on what machines can and cannot do.

INTERVIEW WITH RALF JANSEN – Product Manager and Software Architect at Arvato Systems / Vidispine

To find out more on this subject, we talked to Ralf Jansen, Product Manager and Software Architect at Vidispine, an Arvato Systems brand. Ralf Jansen has a strong technical background: he completed a computer science degree with a diploma thesis at the Fraunhofer Institute and has since worked as a developer and software architect in the industry for nearly 20 years. Today Ralf Jansen is managing the development of the new VidiNet Cognitive Services (VCS) and is part of the VidiNet partner success team.

SO, RALF, WHY ARE COGNITIVE SERVICES IMPORTANT?

Cognitive services allow the machine to find information inside the video and audio frame itself, very much like we humans interpret the same content. This of course opens up important new possibilities depending on what type of workflow you are managing. A channel distributor can use cognitive services to automatically find new types of information in huge amounts of media content that could not be processed manually before, and then use or present those insights to the viewer as a program, highlights, suggested shows or even autogenerated trailers. Cognitive services carry this new information as metadata and give your MAM system new and much more granular methods of managing your media files. This is very important in the process of optimizing the performance and capabilities of your evolving media supply chain.

Revenue, and how to improve it, is of course a driver for the advancement and adoption of cognitive services, as it is for most other technologies. And once you become familiar with the idea of challenging your common view of what machines can do, the subject of revenue through technology gets even more interesting.

IN WHAT AREAS COULD COGNITIVE SERVICES IMPROVE EXISTING REVENUE STREAMS?

Knowing and understanding the inside of your media opens many new opportunities that can improve revenue and help customers monetize the media assets they own. The first one that comes to mind is of course “speech to text”. Already today, automatic transcription can in the best case reach or even exceed the magical benchmark of human understanding (roughly a 5% error rate), depending on how clearly the words are spoken and how well known the vocabulary is. Automatic speech to text at this level not only frees up human resources and saves money otherwise spent on external subtitling services, but also enables a new layer of time-based metadata. You can navigate in time to find deep-linked subjects, names and topics simply by searching the contents of your subtitles with your MAM system's accurate search capabilities, in our case powered by Elasticsearch. And this is of course just one of many examples.
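To make the 5% figure concrete, here is a minimal sketch of how a transcription error rate is commonly measured. It assumes the figure refers to word error rate (WER): the number of word substitutions, insertions and deletions needed to turn the automatic transcript into the reference transcript, divided by the number of reference words. The function name and the sample sentences are illustrative only and are not part of any Vidispine or AWS API.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] holds the edit distance between the reference words
    # processed so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing
                curr[j - 1] + 1,     # insertion: extra hypothesis word
                prev[j - 1] + cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical 20-word reference with a single misheard word.
reference = ("the quick brown fox jumps over the lazy dog and then "
             "runs far away into the deep dark green forest")
hypothesis = reference.replace("lazy", "hazy")
print(f"WER: {word_error_rate(reference, hypothesis):.2%}")
```

One wrong word in twenty is exactly the 5% level mentioned above, which gives a feel for how little manual correction such a transcript would need.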

It is important to understand the value of temporal metadata, since the captured reality stored in a video (and audio) file changes with every frame, 30 to 60 times per second or more. Because of temporal metadata, we are able to define accurate time spans for the different video and audio content detected by cognitive services. A post house ingesting reality content normally uses human resources for logging and preparing projects for the editors. In these and similar production workflows, the challenge is the huge amount of incoming raw footage that needs to be sorted and presented to the editors. Turnaround times need to be as short as possible while maintaining accuracy.

Being able to automatically detect actors, environments, speech and other image and audio contents will speed up not only the ingest work but most likely also the editing, and improve the quality of the final product.
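The step from per-frame detections to time spans can be sketched as follows. This is an illustration under stated assumptions, not how VidiCore API stores temporal metadata: the `TimeSpan` type, the `frames_to_spans` function and the `max_gap` parameter are hypothetical names, and the input is simply a list of frame numbers in which a label was detected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeSpan:
    label: str
    start_seconds: float
    end_seconds: float


def frames_to_spans(label: str, frames: list[int], fps: float,
                    max_gap: int = 1) -> list[TimeSpan]:
    """Merge frame numbers where `label` was detected into time spans.

    Frames further apart than `max_gap` start a new span. Each span
    ends at the end of its last frame, hence the `+ 1`.
    """
    ordered = sorted(set(frames))
    spans: list[TimeSpan] = []
    if not ordered:
        return spans

    start = prev = ordered[0]
    for frame in ordered[1:]:
        if frame - prev > max_gap:
            spans.append(TimeSpan(label, start / fps, (prev + 1) / fps))
            start = frame
        prev = frame
    spans.append(TimeSpan(label, start / fps, (prev + 1) / fps))
    return spans


# A "car" seen in frames 0-4 and again in frames 50-52 of 25 fps footage.
for span in frames_to_spans("car", [0, 1, 2, 3, 4, 50, 51, 52], fps=25):
    print(span)
```

Storing two spans instead of eight individual frame hits is what lets a logger or editor jump straight to the relevant part of the footage.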

SO, WHERE DO WE START? WHAT IS NEEDED FROM A MAM SYSTEM TO MAKE USE OF COGNITIVE SERVICES?

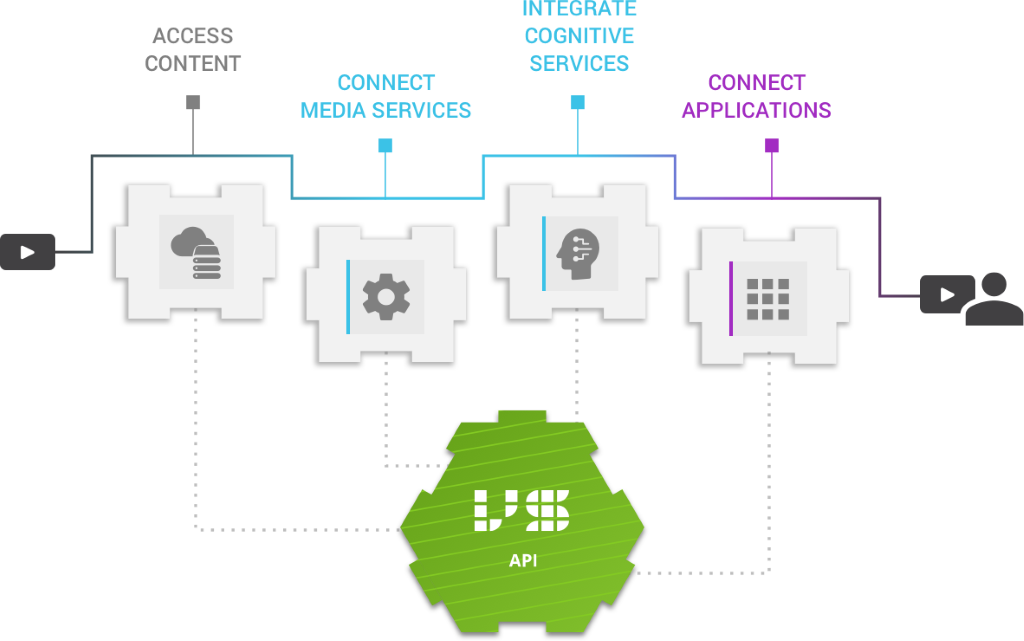

There needs to be a unified service approach in place within the MAM architecture. There is a growing number of cognitive service providers, and a MAM system needs to be able to not only make room for additional layers of temporal metadata but also conform cognitive metadata from many different providers into a common structure. This is important because there will be different trained models for different purposes, and you of course want to use and combine cognitive services from different providers to improve your media supply chain's capabilities and performance.

In the VidiCore API we have defined a standard structure for the cognitive metadata from different providers. Because of this, customers don’t have to care about how to model and integrate the different metadata results coming back from different providers.
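As a rough illustration of what conforming results into a common structure involves, the sketch below maps two made-up provider payloads onto one shared record type. Everything here is an assumption for the sake of the example: the `CognitiveTag` type, the two provider formats and their field names are hypothetical and do not represent the VidiCore API schema or any real provider's response format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CognitiveTag:
    provider: str
    label: str
    start_seconds: float
    end_seconds: float
    confidence: float  # normalized to the range 0.0 to 1.0


def from_provider_a(item: dict) -> CognitiveTag:
    # Hypothetical provider A: millisecond offsets, confidence in percent.
    return CognitiveTag(
        provider="provider_a",
        label=item["name"].lower(),
        start_seconds=item["start_ms"] / 1000,
        end_seconds=item["end_ms"] / 1000,
        confidence=item["confidence_pct"] / 100,
    )


def from_provider_b(item: dict) -> CognitiveTag:
    # Hypothetical provider B: [start, end] in seconds, score from 0 to 1.
    start, end = item["span"]
    return CognitiveTag(
        provider="provider_b",
        label=item["tag"].lower(),
        start_seconds=float(start),
        end_seconds=float(end),
        confidence=float(item["score"]),
    )


def unify(a_items: list[dict], b_items: list[dict],
          min_confidence: float = 0.5) -> list[CognitiveTag]:
    """Convert both payloads, drop weak detections, sort by start time."""
    tags = [from_provider_a(i) for i in a_items]
    tags += [from_provider_b(i) for i in b_items]
    kept = [t for t in tags if t.confidence >= min_confidence]
    return sorted(kept, key=lambda t: (t.start_seconds, t.label))


a_items = [{"name": "Car", "start_ms": 1500, "end_ms": 4000,
            "confidence_pct": 92.0}]
b_items = [{"tag": "face", "span": [0.5, 2.0], "score": 0.81},
           {"tag": "dog", "span": [3.0, 3.5], "score": 0.2}]
for tag in unify(a_items, b_items):
    print(tag)
```

Once every provider is mapped to the same record, search, filtering and timeline display only need to understand one shape, regardless of how many providers are added later.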

SO VIDISPINE IS NOT ONLY MANAGING AWS AI SERVICES?

No, and that is something I really want to emphasize here. The VidiCore API architecture is completely agnostic to any cognitive services we implement, and a number of providers, for example Valossa and other preferred partners, are already in preparation.

Cognitive services are often referred to as AI, but a better reference is probably Deep Learning, which is a subset of Machine Learning. The history of Machine Learning starts as early as 1943, when neurophysiologist Warren McCulloch and logician Walter Pitts used the neural networks of the human brain as the basis for a mathematical model mimicking the “thought process” behind learning by trial and error. Walter Pitts and Warren McCulloch called this combination of algorithms and mathematics “threshold logic”. The confidence values and thresholds we work with today echo this idea.

This method proved very successful and forms the foundation of today’s machine learning algorithms.

It is important to understand the technical background of this technology, since its capabilities evolve over time as the algorithms are trained on more and more material. As an example, AWS speech recognition today is better than a year ago and will be even better a year from now.

IN API 5.0, THE AWS AI SERVICES ARE ENABLED IN VIDICORE API. WHAT FEATURES DO WE HAVE SO FAR IN THE PRODUCT?

The VidiCore API platform in itself has the advantage of being prepared to integrate with any number of cognitive services and suppliers. First out, we have integrated VidiCore API with Amazon Rekognition and Amazon Transcribe. A user in VidiNet selects one of these AWS AI services and adds it to the current media supply chain. Being able to integrate many small cognitive services and orchestrate them together is likely the best strategy here, and this is why Vidispine will be the perfect platform for any media supply chain relying on cognitive services today and in the future.

Being able to add more cognitive services as they appear in VidiNet is of course very important, since this requires no additional custom integration with the media supply chain currently in use. VidiNet takes care of the integration layers in the Vidispine database. Also, any requirement for scaling performance when analyzing large batches of media is managed by the built-in cloud-native architecture of VidiNet.

If you suddenly need to analyze 1000 files instead of 100 in a limited time span, VidiNet will scale your performance to meet this new requirement.

WHAT KIND OF WORKFLOW DO YOU PREDICT TO BE THE MOST COMMON IN THE NEAR FUTURE?

It isn’t easy to point to a specific workflow, really. Besides the usual suspects like speech to text and face recognition, I see the advantages more from a media supply chain perspective, where business and content intelligence come together. Customers in the future will recognize the importance of service-agnostic basic metadata extraction that offers various types of cognitive recognition models while being able to unify all this metadata into a single MAM system and media supply chain to drive business intelligence. In addition, I believe that, especially in the field of computer vision, there should be a simple way of training your own current and regional concepts with just a few examples (training data), integrated directly in the MAM. What this could look like and what we are experimenting with in this respect is beyond the scope of this issue and may be the subject of another one.

And once all the necessary time-accurate information is available, we are able to build value-added services on top. These range from content intelligence, such as searching and monetizing content, to content recommendations based on genealogy patterns, (real-time) assistance systems for rights ownership, recommendations while cutting for target program slots based on rating predictions, content compliance, automatic highlight cuts, domain-specific archive tagging packages, similarity search with respect to owned licenses, and much more.

Customers will start to find their own applications and use cases for cognitive services. At the same time, there is a growing number of cognitive service providers out there with unique or overlapping functionality.

This is why the customized metadata architecture, combined with centralized domain transformation from different metadata providers in VidiCore API and the cloud-native VidiNet service portal, becomes the obvious media supply chain of the future.

HOW DOES IT WORK?

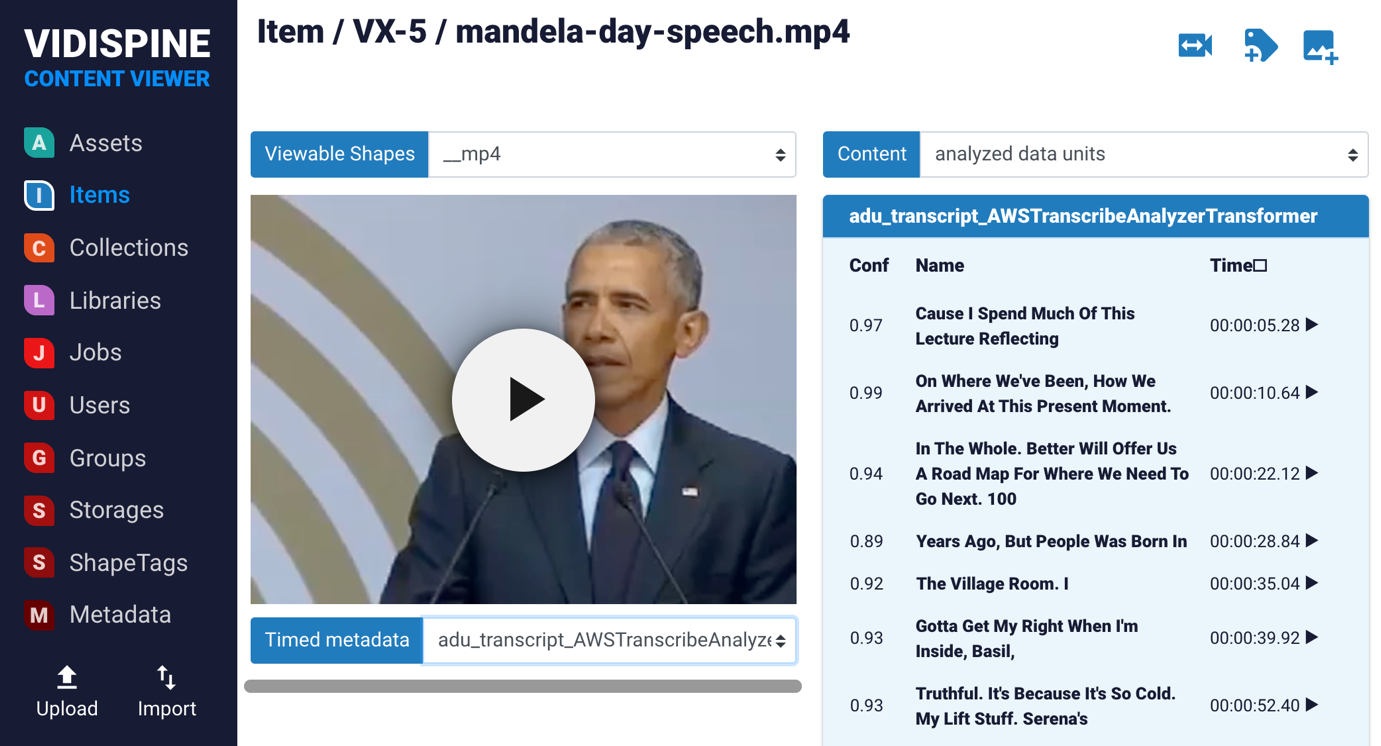

Let's take a look at the user interface of VidiNet and how the AWS AI services can be used.

After adding the AWS AI services to your Media Supply Chain in VidiNet you are ready to explore the different features.

Vidispine comes with a simple interface, VCV (Vidispine Content Viewer), where developers can test the results of our different services before adding them to production. In the example below we are testing the Amazon Transcribe results on a test clip of former President Obama. It is clear that we cannot fully trust any speech transcription service yet, but you can immediately see that the accuracy is pretty high. Human intervention will therefore only be needed to adjust a small part of the actual transcription.

Amazon Rekognition is applied the same way. By analyzing your content you will get time-based metadata back with information on objects and faces recognized in your media.

Written by